Essential Building Blocks for Data Centres

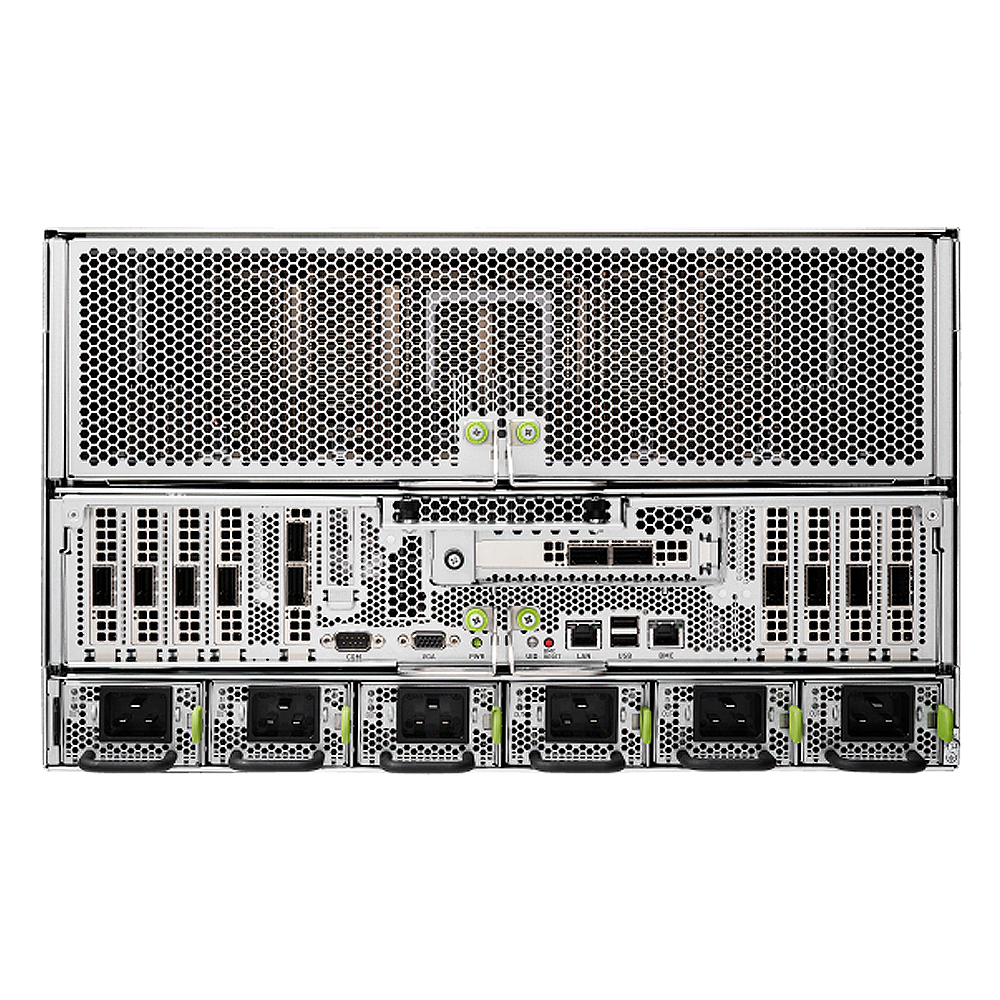

The AMAX NVIDIA DGX A100 is an essential building block for a data centre. It is a universal system for all AI workloads, offering unprecedented compute density, performance, and flexibility in a 5 petaFLOPS AI system. NVIDIA DGX A100 features the world’s most advanced accelerator, the NVIDIA A100 Tensor Core GPU, enabling enterprises to consolidate training, inference, and analytics into a unified, easy-to-deploy AI infrastructure that includes direct access to NVIDIA AI experts.

The Challenge of Scaling Enterprise AI

Every business needs to transform using artificial intelligence (AI), not only to survive but to thrive in challenging times. However, enterprises need an AI infrastructure platform that improves on traditional approaches, which historically relied on slow computing architectures siloed by workload, with analytics, training, and inference each running on separate infrastructure. That approach created complexity, drove up costs, limited how quickly deployments could scale, and was not ready for modern AI. Enterprises, developers, data scientists, and researchers need a new platform that unifies all AI workloads, simplifying infrastructure and accelerating ROI.

The Universal System for Every AI Workload

DGX A100 sets a new bar for compute density, packing 5 petaFLOPS of AI performance into a 6U form factor, replacing legacy compute infrastructure with a single, unified system. The AMAX NVIDIA DGX A100 also offers the unprecedented ability to deliver fine-grained allocation of computing power, using the Multi-Instance GPU capability in the NVIDIA A100 Tensor Core GPU, which enables administrators to assign resources that are right-sized for specific workloads. This ensures that the largest and most complex jobs are supported, along with the simplest and smallest. Running the DGX software stack with optimised software from NGC, the combination of dense compute power and complete workload flexibility makes DGX A100 an ideal choice for both single-node deployments and large-scale Slurm and Kubernetes clusters deployed with NVIDIA DeepOps.
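As a rough illustration of what fine-grained allocation with Multi-Instance GPU means, the sketch below encodes the A100 80 GB MIG profiles (1g.10gb through 7g.80gb), assuming each A100 exposes seven compute slices and eight memory slices, so a full DGX A100 can be split into at most 8 × 7 = 56 instances. The constant names and the helper `fits_on_one_gpu` are hypothetical. The check covers only the slice budgets and ignores MIG's placement rules, so a mix that passes may still be rejected by the driver; NVIDIA's own tooling (for example `nvidia-smi mig -lgip`, which lists the available GPU instance profiles) remains the authoritative source.

```python
# Hypothetical sketch: slice budgets for A100 80 GB MIG profiles.
# Assumption: 7 compute slices and 8 memory slices per A100 GPU.
PROFILE_COMPUTE_SLICES = {"1g.10gb": 1, "2g.20gb": 2, "3g.40gb": 3, "4g.40gb": 4, "7g.80gb": 7}
PROFILE_MEMORY_SLICES = {"1g.10gb": 1, "2g.20gb": 2, "3g.40gb": 4, "4g.40gb": 4, "7g.80gb": 8}
COMPUTE_SLICES_PER_GPU = 7
MEMORY_SLICES_PER_GPU = 8
GPUS_PER_DGX_A100 = 8


def fits_on_one_gpu(profiles):
    """Necessary but not sufficient: checks slice budgets only, not placement rules."""
    compute = sum(PROFILE_COMPUTE_SLICES[p] for p in profiles)
    memory = sum(PROFILE_MEMORY_SLICES[p] for p in profiles)
    return compute <= COMPUTE_SLICES_PER_GPU and memory <= MEMORY_SLICES_PER_GPU


def max_instances_per_system():
    # Every GPU split into its smallest (1g) instances.
    return GPUS_PER_DGX_A100 * COMPUTE_SLICES_PER_GPU


if __name__ == "__main__":
    print(max_instances_per_system())                           # 56
    print(fits_on_one_gpu(["3g.40gb", "3g.40gb"]))              # True
    print(fits_on_one_gpu(["3g.40gb", "3g.40gb", "1g.10gb"]))   # False: needs 9 memory slices
```

The point of the example is the right-sizing described above: one GPU can host a single large 7g instance for a big training job, or several small 1g instances for lightweight inference.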

- 8X NVIDIA A100 GPUS WITH UP TO 640 GB TOTAL GPU MEMORY: 12 NVLinks/GPU, 600 GB/s GPU-to-GPU Bi-directional Bandwidth.

- 6X NVIDIA NVSWITCHES: 4.8 TB/s Bi-directional Bandwidth, 2X More than Previous Generation NVSwitch.

- 10X MELLANOX CONNECTX-6 200 Gb/s NETWORK INTERFACES: 500 GB/s Peak Bi-directional Bandwidth.

- DUAL 64-CORE AMD CPUs AND UP TO 2 TB SYSTEM MEMORY: 3.2X More Cores to Power the Most Intensive AI Jobs.

- UP TO 30 TB GEN4 NVMe SSD: 50 GB/s Peak Bandwidth, 2X Faster than Gen3 NVMe SSDs.
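Several of the headline figures above are simple products of per-component numbers. The sketch below reproduces them under stated assumptions that are not in the spec list itself: 50 GB/s bidirectional per third-generation NVLink link, the 80 GB A100 variant (the 40 GB variant gives 320 GB total), a 200 Gb/s per-direction line rate per ConnectX-6 port, and a previous-generation DGX-1 with dual 20-core CPUs as the baseline for the core comparison. All function names are hypothetical.

```python
# Hypothetical back-of-envelope check of the DGX A100 spec figures.
NVLINKS_PER_GPU = 12
NVLINK_BIDIR_GBYTES = 50      # GB/s per link, both directions (assumption)

GPUS = 8
MEM_PER_GPU_GB = 80           # 80 GB A100 variant (assumption)

NICS = 10
NIC_RATE_GBITS = 200          # Gb/s per port, per direction

NEW_CORES = 2 * 64            # dual 64-core AMD CPUs
OLD_CORES = 2 * 20            # assumed DGX-1 baseline: dual 20-core CPUs


def nvlink_bandwidth_gbs():
    return NVLINKS_PER_GPU * NVLINK_BIDIR_GBYTES


def total_gpu_memory_gb():
    return GPUS * MEM_PER_GPU_GB


def network_bidir_gbs():
    # Gb/s -> GB/s (divide by 8), then double for both directions.
    return NICS * NIC_RATE_GBITS / 8 * 2


def core_ratio():
    return NEW_CORES / OLD_CORES


if __name__ == "__main__":
    print(nvlink_bandwidth_gbs())   # 600
    print(total_gpu_memory_gb())    # 640
    print(network_bidir_gbs())      # 500.0
    print(core_ratio())             # 3.2
```

Note the unit change in the network figure: the ports are rated in gigabits per second, while the 500 GB/s total is quoted in gigabytes per second across both directions.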
